Tits or GTFO: The Aggressive Architecture of the Internet

Alison Harvey / University of Leicester

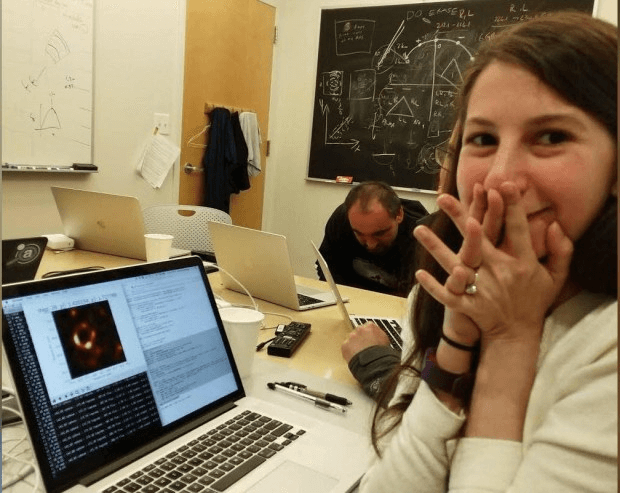

The breaking news on April 10, 2019 was the unveiling of the first-ever photo of a black hole, but within a few short days reporting shifted to focus on the consequences of this story for the young female postdoctoral fellow who contributed a significant algorithm to the collaboration. Katie Bouman, who was frequently used as the face of the Event Horizon Telescope project in media stories, took to social media to situate her work within the international scope of the collaboration. Still, she became the target of vitriolic sexist and misogynist harassment focused on discrediting and diminishing her input into the project.

As The Guardian article reporting on the attacks notes, such practices are “par for the course for women”, a now-familiar pattern of toxic speech, doxxing, account spamming, and assorted hatred documented across many domains. This includes politics, journalism, and popular culture, and is particularly intense for women of colour. GamerGate, the coordinated campaign of harassment against visible and vocal women, LGBTQ individuals, and people of colour in games, was perhaps the moment when these tactics of exclusion garnered mainstream media attention, but as Emma Jane [1] notes, there is a long history of incivility online that has not been adequately documented.

This is partly because the mediation of misogyny, racism, and hate is easily dismissed as the work of ‘trolls’, anonymous online entities easily distinguished and distanced from one’s colleagues, friends, and family members. Digital culture scholarship has begun to address the gaps identified by Jane, with researchers including Adrienne Massanari [2] and Caitlin Lawson [3] exploring the persistence and potency of online hate and incivility from the perspective of platform politics. This work draws from Tarleton Gillespie’s [4] foundational intervention into discourses celebrating the neutrality and progressiveness of online intermediaries such as YouTube via recourse to the metaphor of ‘platform’. In tandem with a growing body of research into networked media infrastructures [5], these analyses highlight the importance of considering the interplay of the social and the technical when examining online norms and practices, as networked communication technologies both shape and are shaped by economic and political interests as well as ideological values.

These perspectives are informed by science and technology studies, which provides a critical vocabulary for understanding the politics of technologies that captures the mutually shaping relationship between social actors and technological systems, including within media culture. In ‘Do Artifacts Have Politics?’, Langdon Winner observes that technologies can embody political properties in two ways, as “forms of order” or “inherently political technologies” [6]. Whereas the latter indicates systems ordaining particular political relationships, the former refers to technologies that “favor certain social interests” with “some people…bound to receive a better hand than others” [7]. When the harassment of women, people of colour, and LGBTQ groups online becomes normalized as part of everyday life, it becomes clear that the deck is not stacked in their favour in the design, governance, or regulation of networked media technologies.

Massanari’s research into Reddit and Lawson’s multiplatform analysis indicate that there are designed elements of online spaces enabling the generation and circulation of toxic speech and harassing activity just as much as the celebrated practices of information seeking, community building, and social play. Increasingly, blue-sky perspectives about the promises of digital life are tested by research on in-built technological biases, such as Safiya Noble’s [8] damning revelations of Google’s racist algorithms and Katherine Cross’ [9] work on structures supporting toxicity in online games. In their challenges to long-held notions of freedom, democratization, and progress associated with digital environments, such critiques are met with ferocious defences of freedom of speech in particular. This is but one example of the social norms framing these technologies and practices around them, for as Danielle Citron [10] notes, civil rights legislation is an equally applicable legal framework to deal with abuse, though it is rarely invoked, to the detriment of women and young people specifically.

Taken as a whole, growing evidence suggests that the designed technological systems and artefacts constituting our digital life serve to discriminate, marginalize, and exclude some publics in an increasingly naturalized but largely invisible fashion. This is neither intended nor deterministic, as online feminist and anti-racist activism indicates, and of course the Internet is not the origin or sole site of hate or discrimination in everyday life. However, inattention and indifference to how participation may be inhibited by designed affordances and functionality, likely due to how these interactions are linked to platform profitability, is what makes the Internet’s built environment one that is increasingly an ‘aggressive architecture’.

This concept is derived from critical architecture and urban studies, which tackles those Othered by invisible systems through the rise of what is called ‘defensive’ or ‘hostile’ architecture [11] aimed at discouraging or deterring certain kinds of activity in public spaces. For example, spikes as well as specially designed benches in outdoor spaces are implemented by local councils to deter homeless people from lying down. These forms of silent, repellent design are documented as examples of ‘unpleasant design’, a moniker indicating how such structural obstructions pervert the ideals of architecture: to design spaces that improve people’s lives. For this reason, it is more accurate to refer to these types of hostile innovations within built environments not as defensive but aggressive, as they invisibly enshrine a desirable public and systematically disadvantage those not valued within this vision.

While it would be fallacious to suggest that an architecture built on open communication is inherently hostile, I argue that ‘active inactivity’ in dealing with toxic and hateful speech and action in the regulation of these sites is what becomes aggressive architecture, as the concerns, needs, and well-being of publics continue to go unaddressed despite their visibility. The value of this framing is that it highlights the necessity of socio-technical approaches and analysis to account for the political nature of designed spaces of digital life, enabling a broad view of these exclusions as structural, systemic, and interlocking rather than about individual high-profile cases or specific sites.

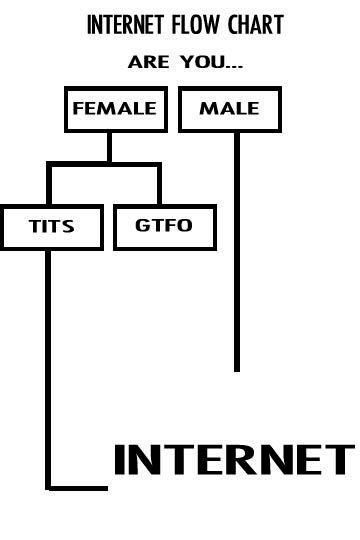

For instance, digital gaming and anti-social networks such as 4chan have long been infamous for incivility towards women, LGBTQ individuals, and people of colour, generating memes such as ‘Tits or GTFO’ that encapsulate how some are not acknowledged as legitimate or credible publics within these spaces. As this kind of harassment, hate, and abuse spreads more broadly across the Internet, socio-technical approaches that tackle the systems and artefacts enabling this become vitally necessary. Fortunately, thinking about aggressive architecture equally affords opportunities for addressing constraints and designing for a more equitable, safe, and inclusive online environment, important and exciting work that has already begun with various projects engaging with the vision of a feminist Internet. Social justice-oriented work into networked technologies, considering the injustices embedded in digital data and design as well as more consentful approaches to technology, provides innovative socio-technical approaches to dealing with exploitation and exclusion. Support and adoption of these principles and tactics are urgently needed, and so too is resistance against the growing naturalization of harassment as part and parcel of engaging in everyday life.

Image Credits:

1. Katie Bouman

2. Zoe Quinn

3. League of Legends and toxicity (author’s screen grab)

4. Camden Bench

5. Tits or GTFO meme


1. Jane, E. 2014. “‘You’re a Ugly, Whorish, Slut’: Understanding E-Bile.” Feminist Media Studies 14(4): 531-546.

2. Massanari, A. 2017. “#GamerGate and The Fappening: How Reddit’s Algorithm, Governance, and Culture Support Toxic Technocultures.” New Media & Society 19(3): 329-346.

3. Lawson, C. 2018. “Platform Vulnerabilities: Harassment and Misogynoir in the Digital Attack on Leslie Jones.” Information, Communication & Society 21(6): 818-833.

4. Gillespie, T.L. 2010. “The Politics of Platforms.” New Media & Society 12(3): 347-364.

5. Parks, L. & Starosielski, N. (Eds.) 2015. Signal Traffic: Critical Studies of Media Infrastructures. Urbana: University of Illinois Press.

6. Winner, L. 1980. “Do Artifacts Have Politics?” Daedalus 109(1): 121-136, 123.

7. Winner, 125-126.

8. Noble, S. 2018. Algorithms of Oppression: How Search Engines Reinforce Racism. New York: New York University Press.

9. Cross, K. 2014. “Ethics for Cyborgs: On Real Harassment in an ‘Unreal’ Place.” Loading… The Journal of the Canadian Game Studies Association 8(13): 4-21.

10. Citron, D.K. 2014. Hate Crimes in Cyberspace. Cambridge: Harvard University Press.

11. Petty, J. 2016. “The London Spikes Controversy: Homelessness, Urban Securitization and the Question of ‘Hostile Architecture’.” International Journal for Crime, Justice and Social Democracy 5(1): 67-81.
